In my previous post, which was a review of the book Applied Cryptography and Cryptography Engineering, I wrote that DES, in spite of retiring officially (after numerous successful attacks), is still a great algorithm from an academic perspective to learn and peek into the minds of cryptographers. In this one, I’ll try to explain DES in my own words, testing my own understanding and giving you a (hopefully) nice read from a novice perspective. So let’s get started with DES, a cipher that was once the standard for government data encryption for the US and many others around the globe, now defunct and only exists, if at all, in form of 3DES.

Before we begin, let us understand where we are (with DES) in the cryptoverse and then talk about DES itself. In cryptography, encryption of data can happen in two ways. There’s symmetric cryptography, and there’s asymmetric cryptography. In symmetric key cryptography, we have block ciphers and stream ciphers. DES is a block cipher.

A Brief History

DES, for Data Encryption Standard, is a symmetric key encryption algorithm proposed by IBM with inputs from the NSA in 1974. Not many details about the development process were shared with the world. It is studied by numerous experts and counts as one of the most studied ciphers of all time. DES was designed to keep government secrets, secrets.

The 56 bit key size didn’t impress anyone back in the day, much less today. In spite of a small key size, there weren’t any attacks faster than brute force, both theoretically and practically, until into the late 80s when Adi Shamir and Eli Biham discovered a new kind of attack on block ciphers called differential cryptanalysis. The world then learnt that NSA and IBM knew about this attack since at least 1974, and the algorithm was designed specifically to counter this attack.

In late 90s DES was practically cracked in a contest and then many times after that. The main weakness in DES was the small key size, and to patch it, 3DES was proposed which is still used today, although not recommended. But from an academic point of view, DES is a gold mine. It is easy to understand, let’s us deep dive into the way cryptographers think and learn why certain decisions are made, and most importantly, why the math just works!

DES algorithm from 10,000ft

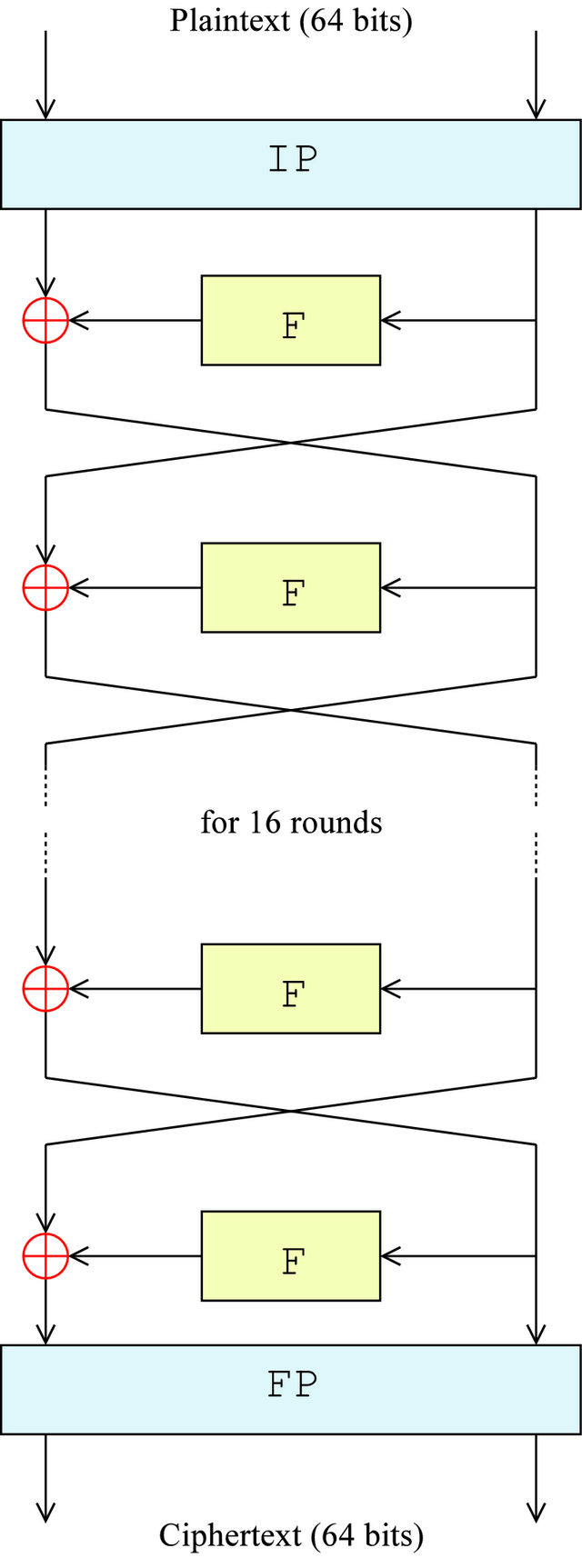

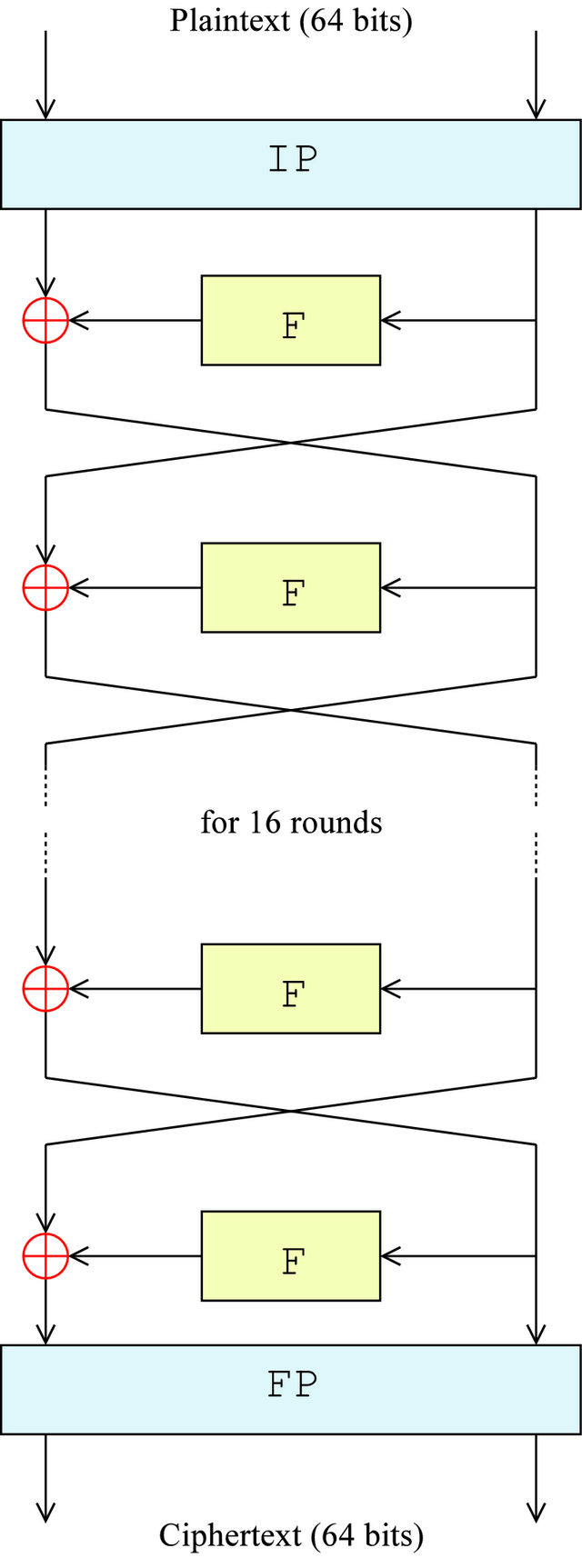

Okay, let’s start with a super complex diagram that you probably won’t understand without 4 years of formal training in mathematics. Just kidding.

And for the sake of my love for bullet points,

- DES is a Feistel cipher, which is a family of ciphers which are iterative in nature (repeat a simple set of instructions several times, called ’rounds’) and share many similar properties.

- DES has a block size of 64 bits, that is, 64 bits of plaintext is converted into 64 bits of ciphertext in one go.

- The algorithm makes use of a 64 bit key, 56 of which are used by the algorithm and 8 are used for parity check. Effective security is 56 bits.

- DES has 16 rounds.

- The encryption and decryption functions are almost similar, which is a great advantage as the implementation and audit has to be done for single function only, simplifying things.

So how does the algorithm work? Like any other algorithm, you can put it down as a list of easy to understand steps.

https://en.wikipedia.org/wiki/File:DES-main-network.png

- Take input as plaintext block of 64 bits, and key K

- Apply Initial Permutation (IP) on input plaintext (which shuffles the bits in a predefined manner)

- Split the input into left half and right half (L0 and R0) (form two equal halves of 32 bits, no tricks)

- Apply magic function F (not really) on the right half R0 (32 bits input => 32 bits output)

- Function F takes R0 and K1 as input, where R0 is the right halve (32 bit) input for the 1st round and K1 is the 1st round key. In this step, the key material mixes with the plaintext

- XOR output of F (32 bits) with L0 (which is already 32 bits), this is the new R1 (L0 ⊕ F(R0) => R1). R0 is simply copied to L1

- Thus, we’ve essentially swapped L0 and R0 with some pre-processing on R0. This completes our round 1. Repeat 4-5-6 16 times and you’ve done 16 rounds of DES.

- Apply reverse Initial Permutation (a.k.a. Final Permutation or IP-1) and you have your ciphertext. Tadaa!

Yes, I know, that was a mouthful, wasn’t it? This slide [link here] shows the round key Ki in action. Now that we have a basic flow, we can take on each of the components and talk about them in detail, in a proper top down approach.

Little aside on confusion and diffusion

Confusion and diffusion are exactly what they mean in plain English. They provide confusion and diffusion properties in the ciphertext. They are crucial for the overall security of the DES algorithm.

Confusion means having a non-linear, complex relationship between the key and the ciphertext. In simple words, each bit of the ciphertext has to depend on as many bits in the key as possible, such that even with a choosen ciphertext attack scenario, not much can be known about the key given a practically infinite supply of plaintext-ciphertext pairs.

Diffusion means any change in the plaintext should cause an avalanche/snowball effect and change around half of the bits in the ciphertext and vice versa.

We will talk more about how DES achieves both of these properties when we talk about the F function in detail.

DES algorithm: Major parts

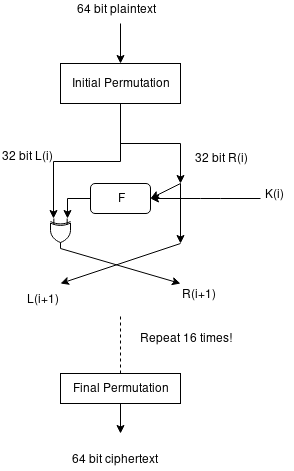

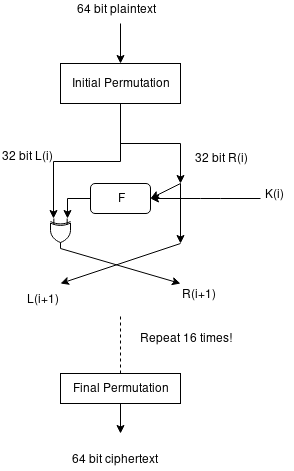

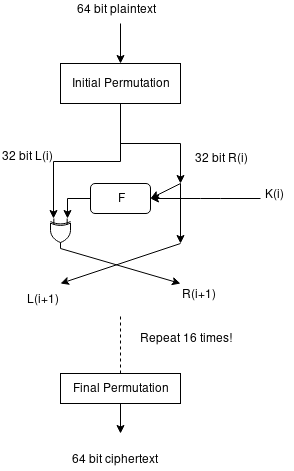

Please take a moment to appreciate the effort I’ve put into the diagram. Error: The K(i) should be K(i+1)

We have here the following major components to talk about.

- Initial permutation, final permutation

- Round key generator

- The round function F

Initial & Final Permutation (IP & FP)

The IP accepts the plaintext and the FP returns the ciphertext generated by the algorithm. In decryption, the ciphertext goes into the FP and plaintext leaves through IP, similar but exact opposite of encryption, which is one of the properties of a Feistel cipher. From functionality perspective, it shuffles the 64 bit input block according to a predefined vector, given below.

IP

58 50 42 34 26 18 10 2

60 52 44 36 28 20 12 4

62 54 46 38 30 22 14 6

64 56 48 40 32 24 16 8

57 49 41 33 25 17 9 1

59 51 43 35 27 19 11 3

61 53 45 37 29 21 13 5

63 55 47 39 31 23 15 7

The above text is a linear list, or a vector, and not a matrix. What it says is “take the 58th bit and connect it to output bit 1”, “take the 50th bit and connect it to output bit 2” and so on. It is basically a one-to-one substitution. So how does it, one might ask, help in adding security if the list is public and it is a simple substitution operation. Well, it does not. To quote wikipedia,

IP and FP have no cryptographic significance, but were included in order to facilitate loading blocks in and out of mid-1970s 8-bit based hardware.

Round Key generator

https://www.nagekar.com/wp-content/uploads/2020/12/key_generation.jpg

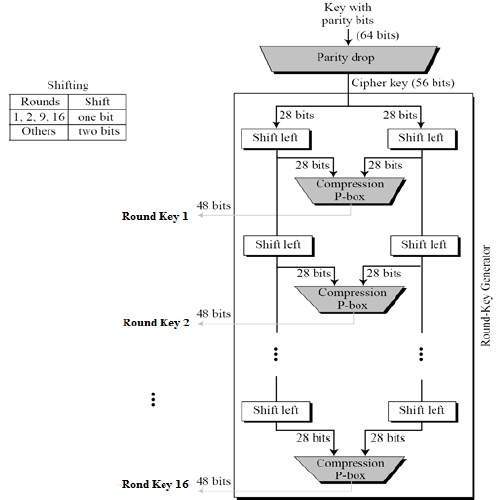

The round key generator function generates a key for each of the 16 rounds of DES. There are a couple of steps involved, as illustrated in the above visual.

- Permuted choice 1 (parity drop) – Get the permuted 56 bit key from the input 64 bit key by dropping the parity bits (bit 8, 16…64 are dropped). The permutation is done according to the predefined vector shown below.

PC-1

57 49 41 33 25 17 9

1 58 50 42 34 26 18

10 2 59 51 43 35 27

19 11 3 60 52 44 36

63 55 47 39 31 23 15

7 62 54 46 38 30 22

14 6 61 53 45 37 29

21 13 5 28 20 12 4

- Split the 56 bit key into two 28 bit halves, and left shift them either by one bit (for round 1, 2, 9 and 16) or by two bits (for every other round).

- Concatenate the two halves thus returned after left shifting, and apply the permutation table 2 to the concatenated pair.

PC-2

14 17 11 24 1 5

3 28 15 6 21 10

23 19 12 4 26 8

16 7 27 20 13 2

41 52 31 37 47 55

30 40 51 45 33 48

44 49 39 56 34 53

46 42 50 36 29 32

- Permuted choice 2 (compression p-box) – Takes a 56 bit key and returns a 48 bit round key Ki after dropping another 8 bits

- The 48 bit round key is then used by our magic function F (remember that?) to mix key into the plaintext by xoring the plaintext with this 48 bit key. (Wait, but our right input halve Ri is 32 bits, right? Yes, we’ll get to how our input is expanded to 48 bits in the next section)

Round Function

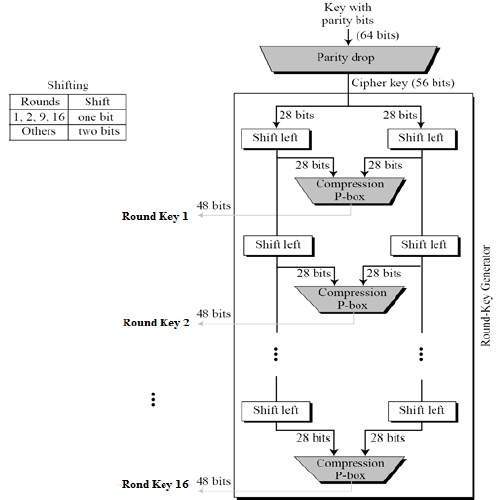

We’re finally into the meat of this beautiful algorithm. I’ve mentioned in brief about what the round function consists of. To reiterate,

- Split the input into left half and right half (Li and Ri) (form two equal halves of 32 bits, no tricks)

- Apply magic function F on the right half Ri-1 (F takes 32 bits input and gives 32 bits output), where Ri-1 is the right halve of the ith round and Ki is the ith round key. This is where the key material mixes with the plaintext.

- XOR output of F (32 bits) with Li-1 (which is already 32 bits), this is the new Ri (that is, Li-1 ⊕ F(Ri-1) => Ri). Unaltered Ri-1 is simply copied to Li

What we haven’t talked about is the magic function F itself. The magic function F isn’t really magical. It just does 4 neat sub-operations, and does them really well.

https://www.nagekar.com/wp-content/uploads/2020/12/Data_Encription_Standard_Flow_Diagram.svg

- Expansion function E

- XOR with round key

- S box substitution

- Permutation

Let’s look at them one by one and try to see where exactly they fit in and what cryptographic property they give to our ciphertext.

Expansion function

E BIT-SELECTION TABLE

32 1 2 3 4 5

4 5 6 7 8 9

8 9 10 11 12 13

12 13 14 15 16 17

16 17 18 19 20 21

20 21 22 23 24 25

24 25 26 27 28 29

28 29 30 31 32 1

As the name might have hinted, the expansion function expands our plaintext input. Expansion gives us diffusion. It diffuses the impact of change of one bit in the input across the block. Remember how the 32 bit Ri part of the 64 bit input is sent to the F function? E function takes those 32 bits of input and expands them to 48 bits. How it does that? Well, repetition, of course. So it basically takes input as 1 2 3 4 5 6 7 8 and outputs something like 1 2 2 3 4 4 5 6 6 7 8 8, effectively increasing the size by 50% (32 => 48).

XOR with round key

XOR is a simple mathematical operation that has a very important property from a cryptographic standpoint. If you XOR a number A with B, you get a new number C. To get A from C, you need B. To get B from C, you need A. Basically, A ⊕ B ⊕ B = A, and A ⊕ B ⊕ A = B. XORing plaintext and key locks them in a interdependent mixture such that to get back the plaintext, you have to have the key with which it was XORed (locked).

S-box substitution

In some ways, this is the heart of the algorithm. S-box substitution gives us confusion. There are eight S-boxes in total, each taking 6 input bits and giving 4 output bits. S-boxes provide DES immunity against differential cryptanalysis which I mentioned at the beginning of this article. Here’s S-box number 1.

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

-------------------------------------------------------------

0 | 14 4 13 1 2 15 11 8 3 10 6 12 5 9 0 7

1 | 0 15 7 4 14 2 13 1 10 6 12 11 9 5 3 8

2 | 4 1 14 8 13 6 2 11 15 12 9 7 3 10 5 0

3 | 15 12 8 2 4 9 1 7 5 11 3 14 10 0 6 13

Here’s how it works. After the XOR operation, we are left with a cryptic looking 48 bit string.

say 110010101100101111111100110111101100111010101001

Now we take this 48 bit string and divide it into 8 equal parts of 6 bits each, and input one of the 8 parts into each S box.

SB1(110101) SB2(101100) SB3(101111) SB4(111100) SB5(110111) SB6(101100) SB7(111010) SB8(101001)

Now, our S-box 1 receives 110101.

We take the first and last bit (1 and 1 in this case, coloured yellow), concatenate it to form a two bit number (1 . 1 => Binary(11)) which is 3, and look it up in the row labels of our S-box 1.

Similarly, we take the middle 4 bits (2 to 5), which in our case are 1, 0, 1 and 0, coloured blue, concatenate them to form a 4 bit number (1 . 0 . 1 . 0 => Binary(1010)) which is 10, and look up the corresponding column label in our S-box 1.

The number corresponding to row 3 and column 10 is 3, which is 0010 in 4 bit binary representation. That is the output of S-box 1 for input 110101. Similarly do this for S-box 2-8, for each of the 16 rounds of DES. The result of the 8 S-boxes (4 bits each) is combined to get a 32 bit output.

Permutation

The final step of our magic function F is a simple one-to-one permutation, taking 32 bits and returning 32 bits.

16 7 20 21

29 12 28 17

1 15 23 26

5 18 31 10

2 8 24 14

32 27 3 9

19 13 30 6

22 11 4 25

Catch your breath

I’m really too proud of this picture. Edit: Not so much after finding that K(i) => K(i+1) error.

Wake up! Do you even remember that all this was done on Ri?

Now, after the F function, which wasn’t very magical after all, returns the 32 bit output, we XOR it with Li, which gives us our new Ri+1, while the untouched Ri is simply copied to Li+1‘s place. Hence begins a new round of DES, which goes on this way for 15 more rounds.

After 16 rounds

Not much is left to be done after the 16 rounds. The two halves are concatenated, the 64 bit cipher block is then passed through our final permutation using FP vector given below, and this gives us our ciphertext. Easy.

40 8 48 16 56 24 64 32

39 7 47 15 55 23 63 31

38 6 46 14 54 22 62 30

37 5 45 13 53 21 61 29

36 4 44 12 52 20 60 28

35 3 43 11 51 19 59 27

34 2 42 10 50 18 58 26

33 1 41 9 49 17 57 25

Wrapping DES Up

So that was DES. I hope you enjoyed reading this article. I’m expecting some mistakes, technical and otherwise, so take everything with a pinch of salt. Some interesting reads are given below for those of you who wish to learn more. I realized that writing this article was a nice way of testing my own understanding of the topic, find holes in it and then study to fix those holes. As always, thank you for reading!

Further Reading